Neural networks are used for many different tasks, but have you ever heard of a butterfly recognition AI? Neither had we, so the opportunity to work on this project was very exciting, and it turned out to be an interesting ride.

Butterflies have had millions of years of evolution to blend in with their environment, which hides them from predators and, in this case, from insect enthusiasts who simply want to admire them. While observing butterflies in the wild can be difficult, watching them through a video camera from the comfort of your home is a lovely way to enjoy our winged friends, especially when AI removes the need to look through hours of footage: it sends you an alert when a butterfly enters the camera’s view and automatically identifies its species.

There are two parts to the butterfly AI:

- object detection - a neural network in charge of detecting the presence of a butterfly or a different insect

- object classification - a neural network in charge of determining which butterfly or insect species was captured

When an object is detected, the system records a short video, which the end user can access later, and takes a snapshot that is sent to a server, where a classification model determines which butterfly or other insect has decided to bless the user with its presence.
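The flow described above can be sketched as a simple per-frame handler. This is a minimal illustration under stated assumptions, not the project's code: `detect`, `record_clip`, and `classify_on_server` are hypothetical stand-ins for the on-camera detector, the video recorder, and the server-side classifier, and the 0.5 threshold is an illustrative value.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Detection:
    label: str         # e.g. "insect" (hypothetical label name)
    confidence: float  # detector score in [0, 1]


def handle_frame(
    frame: bytes,
    detect: Callable[[bytes], List[Detection]],
    record_clip: Callable[[], str],
    classify_on_server: Callable[[bytes], str],
    threshold: float = 0.5,  # illustrative, not a tuned value
) -> Optional[dict]:
    """Run the two-stage pipeline on one frame.

    Returns None when nothing was detected; otherwise returns the clip
    reference and the species label assigned by the server-side classifier.
    """
    # Stage 1: the lightweight detector running on the camera.
    hits = [d for d in detect(frame) if d.confidence >= threshold]
    if not hits:
        return None
    # Record a short video for the user, then send a snapshot for classification.
    clip_id = record_clip()
    species = classify_on_server(frame)  # the current frame serves as the snapshot
    return {"clip": clip_id, "species": species}
```

Keeping the camera side limited to "is anything there?" means only frames that pass the detector ever reach the heavier classifier on the server.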

Low-quality Cameras For Object Detection

To start with, our project had to work with any webcam. This is where we ran into the first problem: low-quality cameras, which are the most affordable and the most widespread. While the ‘no camera limit’ is great for end users, it presented a challenge for us, because the object detection model runs on the camera’s own chipset.

Where someone gets a good deal, others get the short end of the stick, in this case ‘others’ being our CV developers. Working with a cheap camera means working with a cheap chipset, which makes it impossible to use the default neural network architecture. A dedicated computer vision board such as the NVIDIA Jetson Nano can run around 120 layers of the default YOLO v4; the cameras we had to work with allowed only 22.

A full YOLO v4 network provides great recognition results, but a stripped-down version performs poorly. We tested both and were unpleasantly surprised by how shallow the model had to be to run on a cheap chipset.

Testing Default YOLO v4 vs Reduced

We started by training the default YOLO v4 model and testing it on the customer’s dataset. The results were satisfactory: 95% mAP, which in the world of computer vision is more than enough to launch a model into production.
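For readers unfamiliar with the metric: mAP (mean average precision) averages, over all classes, the area under each class's precision-recall curve. The sketch below computes all-point interpolated AP, one common variant (used by PASCAL VOC from 2010 on), from detections already marked as true or false positives. The input format is an assumption for illustration; the exact mAP variant used in this project (IoU threshold, interpolation scheme) isn't stated.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_ground_truth: int) -> float:
    """All-point interpolated AP for one class.

    detections: (confidence, is_true_positive) pairs.
    num_ground_truth: number of annotated objects of that class.
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing (the interpolation "envelope").
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision over each step where recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: List[float]) -> float:
    """mAP is the plain mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0
```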

After retraining the model to fit the camera’s hardware limits, the detection quality dropped significantly. But where machines fail, humans advance.

We ran the neural network on test data and visually reviewed its false positives and false negatives. This showed where the network lacked knowledge and where it made the most mistakes.
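Sorting predictions into false positives and false negatives usually starts by matching them to ground-truth boxes using intersection over union (IoU). Below is a minimal greedy matcher, assuming boxes as `(x1, y1, x2, y2)` tuples and a 0.5 IoU threshold (a common default, not a value stated for this project).

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match(predictions: List[Tuple[Box, float]], ground_truth: List[Box], thr: float = 0.5):
    """Greedily match (box, score) predictions to ground truth, highest score first.

    Returns (true_positives, false_positives, false_negatives) as lists of boxes,
    ready for visual review.
    """
    unmatched = list(ground_truth)
    tps, fps = [], []
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= thr:
            tps.append(box)
            unmatched.remove(best)  # each ground-truth box can be matched only once
        else:
            fps.append(box)
    return tps, fps, unmatched  # leftover ground truth = missed objects
```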

Buggin' Out: False Positives

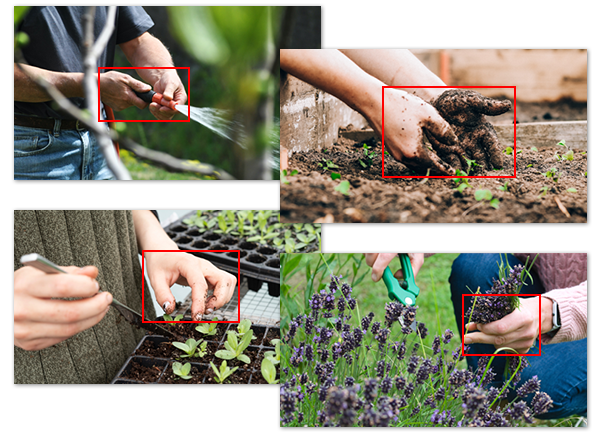

Networks are like children: they struggle a lot until you explain how something is done, and our cybernetic child struggled to tell whether a butterfly or a human hand had entered the camera's view. While the 'networks are like children' analogy is cute, end users are more interested in looking at butterflies than at their neighbors' hands, so we had to teach the network to ignore people and focus on insects instead.

To do this, we added negative examples: pictures of people from various angles, as well as human hands.
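In Darknet/YOLO-style datasets, a negative example is typically an image paired with an empty label file, which tells the detector that the image contains no objects of interest. Below is a small helper that creates those empty label files. It assumes the common layout of one `.txt` label file next to each image; the extension list is illustrative.

```python
from pathlib import Path
from typing import List

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # illustrative set


def add_negative_labels(image_dir: str) -> List[Path]:
    """Create an empty YOLO label file for every image that lacks one.

    An empty .txt file means "this image contains no objects", which turns
    photos of people and hands into negative examples. Existing label files
    are left untouched.
    """
    created = []
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        label = image.with_suffix(".txt")
        if not label.exists():
            label.touch()
            created.append(label)
    return created
```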

Like Moths To Flames: Object Detection At Night

Butterflies are pretty and all, but have you seen how pretty moths can be? Most moths are nocturnal and show up only at night, which posed the additional challenge of making the system work as accurately at night as it does during the day. In the daytime, cameras produce full-color images; at night they switch to infrared mode and produce black-and-white images. When the camera switched to infrared, the model produced a lot of false positives:

- tree leaves moving in the wind

- dust and other debris floating in the air

- fountains

Users would be less than happy to be woken up by a notification, get excited to look at a rare moth or a beetle, but end up looking at a recording of a leaf stuck to a camera lens.

In order to reduce sleep interruptions to a minimum, we collected instances of false positives in nighttime settings and marked them up by hand.
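Because infrared frames are effectively black and white, one way to route nighttime samples for separate review is to check whether a frame's color channels are nearly identical. This is a minimal heuristic sketch. It assumes frames arrive as lists of `(r, g, b)` pixel tuples and uses an illustrative tolerance. A real pipeline might instead read the camera's day/night mode flag, if the camera exposes one.

```python
from typing import Iterable, Tuple

Pixel = Tuple[int, int, int]


def is_infrared_frame(pixels: Iterable[Pixel], tolerance: float = 4.0) -> bool:
    """Heuristic: IR-mode frames are grayscale, so R, G and B barely differ.

    Returns True when the mean per-pixel channel spread is below `tolerance`
    (an illustrative value on a 0-255 scale, not a tuned one).
    """
    total_spread, count = 0, 0
    for r, g, b in pixels:
        total_spread += max(r, g, b) - min(r, g, b)
        count += 1
    if count == 0:
        return False
    return total_spread / count < tolerance
```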

Instagram vs Reality: On The Importance of A Dataset

Ever heard social media called a ‘highlight reel’ where people present the best version of themselves? Who knew the same could be true for insects. Photos of butterflies, moths, and other insects from open sources, like Google Images and YouTube videos, are usually high-quality and very sharp, and they depict specimens at their best, with nothing between the insect and the camera obstructing the view.

Reality is not always as pretty. Cameras produce low-quality images that can be hard to make sense of even for the human eye; bad weather such as rain, snow, or dust can obstruct the view; and we are sure butterflies sense when someone wants to capture them and position themselves in the most ridiculous way possible.

The dataset our client provided (consisting of sharp images found on the Internet) was of little use for this project. We needed to collect images of butterflies in real conditions using the client’s cameras, to show the model what butterflies really look like rather than how they are presented on social media.

What We Have Now

So, after doing all of the above:

- manually going through every instance where the network was wrong

- teaching the network to ignore people and not detect them as insects

- working with black and white images and false positives

- collecting a dataset of butterflies in real-life conditions, from various angles, and in different weather conditions

We have managed to achieve 97.5% mAP for object detection. This is a very high result for a computer vision model, as the unwritten rule for any CV model going into production is to have over 94% mAP.


On the classification side, the network would label a 'viceroy (Nymphalidae)' as a 'monarch (Nymphalidae)': the species was wrong, but the family was right. For the sake of time, we decided it was more important to detect the overall butterfly family than the particular species, so that’s where we started.
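Scoring predictions at both levels makes this trade-off concrete: a viceroy labeled as a monarch counts as a species-level error but a family-level hit. Below is a sketch that assumes a hand-written species-to-family lookup. The four entries are species mentioned in this article; a real lookup would cover every species in the dataset.

```python
from typing import Dict, List, Tuple

# Illustrative lookup built from species mentioned in this article.
SPECIES_TO_FAMILY: Dict[str, str] = {
    "viceroy": "Nymphalidae",
    "monarch": "Nymphalidae",
    "Pieris rapae": "Pieridae",
    "Pieris napi": "Pieridae",
}


def accuracy_at_two_levels(pairs: List[Tuple[str, str]]) -> Tuple[float, float]:
    """Return (species_accuracy, family_accuracy) for (true, predicted) species pairs."""
    if not pairs:
        return 0.0, 0.0
    species_hits = sum(t == p for t, p in pairs)
    family_hits = sum(SPECIES_TO_FAMILY[t] == SPECIES_TO_FAMILY[p] for t, p in pairs)
    return species_hits / len(pairs), family_hits / len(pairs)
```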

After evaluating the model, we decided to implement a multistage approach:

- first, the network determines the butterfly family: butterflies from the same family look similar to each other and significantly different from butterflies in other families, which makes this an easy first step in butterfly classification

- the second step is to identify the species

- the last step is to determine whether the butterfly is male or female
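The three stages above can be chained so that each species classifier only has to choose among species from the family predicted in the first stage. This is a minimal sketch. The family model, the per-family species models, and the sex model are hypothetical callables that return `(label, confidence)`, and multiplying the stage confidences is a simplifying assumption. The article doesn't describe how the real models are wired together.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Classifier = Callable[[bytes], Tuple[str, float]]  # image -> (label, confidence)


@dataclass
class Prediction:
    family: str
    species: str
    sex: str
    confidence: float  # product of the three stage confidences (a simplification)


def classify_hierarchically(
    image: bytes,
    family_model: Classifier,
    species_models: Dict[str, Classifier],  # one species classifier per family
    sex_model: Classifier,
) -> Prediction:
    # Stage 1: coarse family, the easiest distinction.
    family, p_family = family_model(image)
    # Stage 2: species, using only the classifier trained on that family.
    species, p_species = species_models[family](image)
    # Stage 3: male or female.
    sex, p_sex = sex_model(image)
    return Prediction(family, species, sex, p_family * p_species * p_sex)
```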

Practice Makes Perfect: On The Importance Of A Dataset (Again)

When you come across a new concept, you read books or watch educational videos to grasp it. If that fails, you ask a friend to explain it to you or attend a seminar on the topic. In other words, you accumulate more information about it to understand it better.

The same goes for neural networks: the better you need one to understand what a monarch butterfly looks like, the more images of monarchs you need to show it. Generally, the more relevant data it has seen, the better its accuracy will be.

The multistage approach we chose not only improved the accuracy of the object classification model but also made it possible to analyze the dataset and determine where the network lacked training data.

For example, a Pieris rapae, or a cabbage butterfly, differs from a Pieris napi, or a green-veined white butterfly, by an extra set of brown dots on its wings. The network would often mix these butterflies up, meaning they looked very similar to the network. We needed to collect more photos of both butterfly species in order to teach the network the difference between them.
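Pairs like these can be surfaced automatically. You count which (true, predicted) combinations the classifier gets wrong most often, and the top pairs show where more training photos are needed. Below is a sketch that uses only the standard library. It assumes evaluation results arrive as (true label, predicted label) pairs and treats A-mistaken-for-B and B-mistaken-for-A as the same confusion.

```python
from collections import Counter
from typing import List, Tuple


def most_confused(pairs: List[Tuple[str, str]], top: int = 5) -> List[Tuple[Tuple[str, ...], int]]:
    """Return the most frequent unordered label pairs among misclassifications."""
    errors = Counter(
        tuple(sorted((true, pred))) for true, pred in pairs if true != pred
    )
    return errors.most_common(top)
```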

Teamwork Makes The Network Work: Real-Time Training

Insect enthusiasts are a passionate bunch: they know how to identify a butterfly by the shape of a single wing. They possess knowledge that our classification network dreams of having, so why not bring the two together and form a butterfly-loving alliance the world has never seen before?

Currently, the classification network doesn’t just tell the user the butterfly species; it also shows its confidence along with its other guesses.
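To show a confidence score alongside alternative guesses, a classifier typically converts its raw scores (logits) into probabilities with a softmax and returns the few most likely classes. Below is a sketch that uses `k=3` as an illustrative choice. The article doesn't say how many guesses are shown.

```python
import math
from typing import Dict, List, Tuple


def top_k_guesses(logits: Dict[str, float], k: int = 3) -> List[Tuple[str, float]]:
    """Convert raw class scores to probabilities and return the k most likely."""
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits.values())
    exps = {label: math.exp(score - m) for label, score in logits.items()}
    total = sum(exps.values())
    probs = [(label, e / total) for label, e in exps.items()]
    return sorted(probs, key=lambda lp: lp[1], reverse=True)[:k]
```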

The user can confirm the network’s guess or correct it, thus helping us train it, one butterfly at a time. After running the user feedback system for 3 months, we have collected over 20,000 images. This data is invaluable to us, since the photos are taken in real-life conditions (bad weather, at night, etc.) and marked up by experts.
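Each confirmation or correction can be stored as a labeled training example. This is a minimal sketch of such a record. The field names are hypothetical, not the project's schema. Two rules are also assumptions: a user's answer always overrides the model's guess, and a record is created only after the user responds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    image_id: str
    predicted: str                    # the network's top guess
    user_label: Optional[str] = None  # None means the user confirmed the guess

    @property
    def training_label(self) -> str:
        """The label to train on: the user's correction if given, else the confirmed guess."""
        return self.user_label if self.user_label is not None else self.predicted

    @property
    def was_corrected(self) -> bool:
        """True when the user replaced the network's guess with a different species."""
        return self.user_label is not None and self.user_label != self.predicted
```

Tracking `was_corrected` separately also shows which species users correct most often. That is a useful signal for deciding where to collect more data.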


If You Stare Into The Abyss For Long, The Abyss Gives You Butterflies

It is worth noting that during this project, we have become butterfly experts ourselves. Looking at insect photos all day long, while essentially educating a virtual child on all the little differences between wing types, makes one an instant member of the insect enthusiast community. If all else fails, our CV team members could easily find new careers in lepidopterology.

On a serious note, by looking through thousands of butterfly images, whether for dataset markup or to analyze where the network makes the most mistakes, we delved deep into this project. We came out the other end not only with a bunch of butterfly knowledge but also with a better understanding of how complex image recognition and classification systems work, how best to implement them, and how to analyze a large dataset and find its weak points. This project was an invaluable opportunity to research and work with the latest computer vision technologies and with real-time customer feedback, and it polished our problem-solving skills when working with outdated code.

We continue working on the butterfly AI and will share updates very soon!