Introduction

Handwritten document processing remains one of the most challenging problems in modern document automation. Despite major advances in AI-based document understanding, extracting handwritten text from real-world forms continues to produce inconsistent results.

As organizations increasingly rely on AI to automate document workflows, understanding the real-world capabilities and limitations of modern models becomes critical.

The goal of this benchmark is to provide a practical, data-driven comparison of leading AI models for handwritten form recognition. By evaluating multiple models on a shared set of real, hand-filled scanned forms, this study aims to quantify accuracy, highlight strengths and weaknesses, and expose the trade-offs between performance, reliability, speed, and cost.

Read on to learn:

- Which AI models actually deliver reliable handwritten form extraction, and which ones break down outside of clean demos,

- Why two compact, cost-efficient models outperform larger and more expensive alternatives on real-world handwritten documents,

- The structural and semantic bottlenecks that prevent even the best models from reaching 95%+ business-level accuracy.

The results are intended to help organizations make informed decisions when selecting AI solutions for handwritten document processing in production environments.


Benchmark Scope and Evaluation Approach

To evaluate how well modern AI models handle handwritten document extraction in real-world conditions, we designed a benchmark that prioritizes realistic data, practical correctness criteria, and business-relevant metrics rather than synthetic test cases.

Models Evaluated

Seven models were included in the final benchmark:

- Azure

- AWS

- Google

- Claude Sonnet

- Gemini 2.5 Flash Lite

- GPT-5 Mini

- Grok 4

Several lighter-weight models were tested during an initial pilot phase but excluded after failing to reliably extract handwritten content from the sample forms.

Test Dataset

The benchmark used 10 real hand-filled forms scanned from paper. The documents varied intentionally across key dimensions:

- Layout structure and field organization

- Handwriting style (block, cursive, mixed)

- Text density and spacing

- Content types such as names, dates, addresses, and numeric identifiers

This design reflects realistic operational document flows rather than curated or simplified examples.

Ground Truth Preparation

For each form, a manual ground truth dataset was created containing the expected values for every evaluated handwritten field. These ground truth values served as the reference for measuring extraction accuracy.

Scoring Methodology

Evaluation was performed at the field level using a two-stage scoring process:

Automated matching

- Case-insensitive similarity comparison based on Levenshtein distance

- Threshold: 80% similarity or higher = correct

Manual adjustments

- Upgraded to correct when output was semantically accurate despite formatting or minor variation

- Downgraded to incorrect when errors affected critical fields such as names, dates, IDs, or phone numbers, even if similarity was high

This hybrid approach reflects real-world requirements, where semantic correctness matters more than raw text similarity.
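The automated matching stage can be sketched as follows. This is a minimal illustration, not the benchmark's actual implementation: the methodology specifies only a case-insensitive, Levenshtein-based similarity and an 80% threshold, so normalizing by the longer string's length, stripping surrounding whitespace, and all function names here are assumptions.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance counting insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def similarity(expected: str, extracted: str) -> float:
    """Case-insensitive similarity in [0, 1]; 1.0 means identical.

    Assumption: distance is normalized by the longer string's length.
    """
    a, b = expected.strip().lower(), extracted.strip().lower()
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


def is_match(expected: str, extracted: str, threshold: float = 0.80) -> bool:
    """Automated stage only: correct if similarity meets the threshold."""
    return similarity(expected, extracted) >= threshold


print(similarity("John Smith", "JOHN SMITH"))  # 1.0
print(similarity("555-0142", "555-0147"))      # 0.875
print(is_match("555-0142", "555-0147"))        # True
```

Under these assumptions, a phone number with a single wrong digit scores 0.875 and passes the automated stage, which is the kind of case the manual downgrade rule exists to catch.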

Aggregation Logic

Each field was scored as correct or incorrect.

For every model and document, accuracy was calculated as:

Number of correct fields ÷ total evaluated fields

This evaluation framework prioritizes practical usability and mirrors how handwritten document automation systems are judged in production environments: not by approximate matches, but by whether the extracted data can actually be trusted.
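The aggregation step, including manual adjustments, might look like the sketch below. The `FieldResult` structure, the field names, and the sample values are hypothetical and chosen only for illustration; the source defines just the correct/incorrect scoring and the ratio itself.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FieldResult:
    name: str
    auto_correct: bool                      # outcome of automated matching
    manual_override: Optional[bool] = None  # reviewer upgrade/downgrade, if any

    @property
    def correct(self) -> bool:
        # A manual decision, when present, takes precedence over the automated one.
        if self.manual_override is not None:
            return self.manual_override
        return self.auto_correct


def document_accuracy(fields: List[FieldResult]) -> float:
    """Number of correct fields divided by total evaluated fields."""
    if not fields:
        raise ValueError("no evaluated fields")
    return sum(f.correct for f in fields) / len(fields)


# Hypothetical form with five evaluated fields.
fields = [
    FieldResult("full_name", auto_correct=True),
    FieldResult("city", auto_correct=True),
    FieldResult("date_of_birth", auto_correct=True, manual_override=False),  # downgraded
    FieldResult("address", auto_correct=False, manual_override=True),        # upgraded
    FieldResult("phone", auto_correct=False),
]
print(document_accuracy(fields))  # 0.6
```

A field that a model omits entirely would simply be recorded as incorrect, so missed fields lower the score in the same way as misread ones.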


General Benchmark Results

The benchmark revealed significant differences in how modern AI models handle handwritten form recognition. While some models approached production-ready accuracy, others struggled with even moderately complex handwriting. Understanding these results helps businesses identify which AI solutions are suitable for real-world document processing.

Overall Accuracy Trends

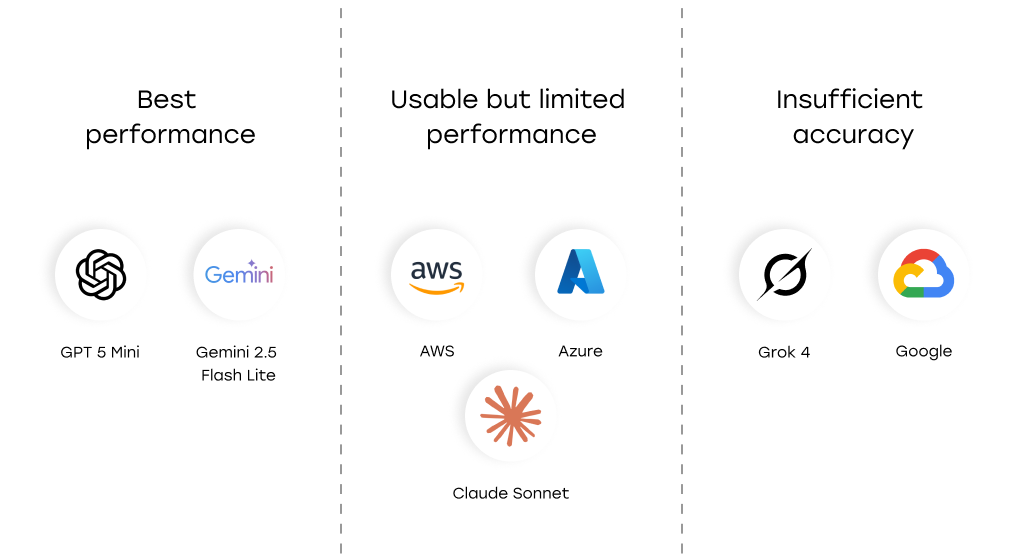

Across all evaluated forms, two models, GPT-5 Mini and Gemini 2.5 Flash Lite, clearly outperformed the rest and consistently achieved the highest document-level accuracy. Both models demonstrated strong performance on diverse handwriting styles, layouts, and field types, making them the most reliable options for critical workflows where errors are costly.

Moderate performers included Azure, AWS, and Claude Sonnet, which delivered acceptable results on clearly structured or block-style handwriting but were less consistent on dense, cursive, or irregularly formatted forms. Their performance often varied from document to document, reflecting sensitivity to handwriting quality and layout complexity.

The remaining models, Google and Grok 4, were unable to deliver sufficient accuracy for practical use. They frequently omitted fields, misrecognized characters, or misinterpreted cursive handwriting, making them unsuitable for real-world handwritten form processing.

Key Accuracy Insights

| Model | Average Accuracy | Performance Summary |
|---|---|---|
| GPT-5 Mini | Highest | Consistently accurate across all document types; minor formatting errors only |
| Gemini 2.5 Flash Lite | Nearly identical to GPT-5 Mini | Excellent performance; slightly sensitive to very dense layouts |
| Azure | Moderate | Reliable on clear block handwriting; struggles with cursive |
| AWS | Moderate | Similar to Azure; accuracy fluctuates between documents |
| Claude Sonnet | Mid-range | Handles some complex forms; inconsistent overall |
| Google | Low | Performs well only on clean, structured forms |
| Grok 4 | Lowest | Frequent omissions and misrecognitions; unsuitable for production use |

These results highlight that achieving high accuracy on handwritten forms is inherently challenging. Even the best models rarely exceed 95% business-level accuracy, due to the complexity and variability of real-world handwriting.

Takeaways

- GPT-5 Mini and Gemini 2.5 Flash Lite are the top choices for organizations requiring reliable, production-grade handwritten form extraction.

- Azure, AWS, and Claude Sonnet may be suitable for low-to-moderate complexity forms, but caution is advised for high-stakes fields like names, IDs, and dates.

- Google and Grok 4 are not recommended for critical handwritten OCR applications at this time.

Model-by-Model Analysis

While the general benchmark results provide an overview of accuracy trends, a closer look at each model reveals important differences in strengths, weaknesses, and practical usability. Understanding these nuances is essential when choosing an AI solution for handwritten form processing.

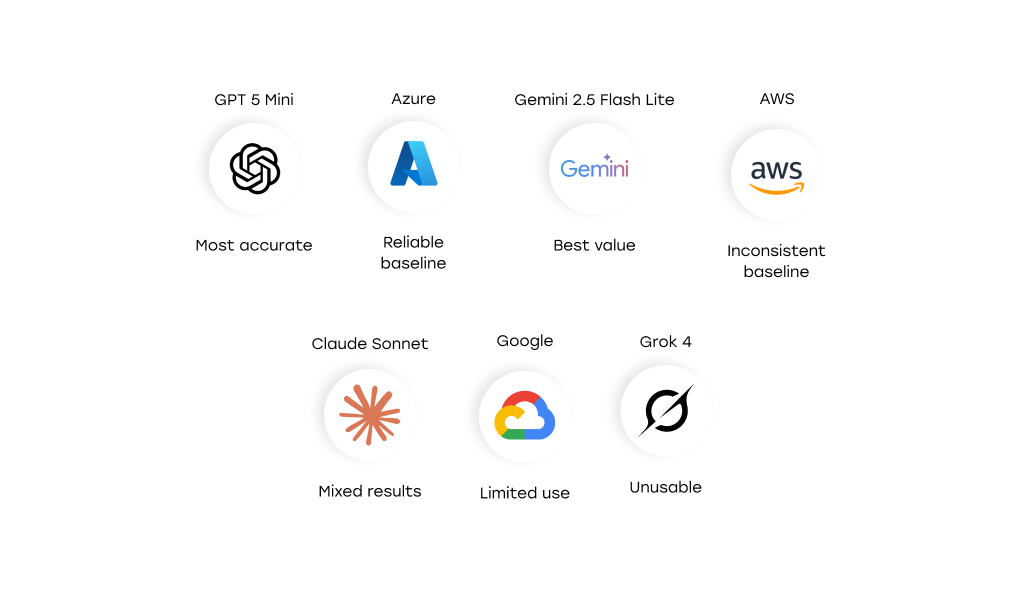

GPT-5 Mini: Best Overall Performance

Accuracy: Highest across all document types

Strengths:

- Handles cursive, block, and mixed handwriting reliably.

- Performs consistently across simple and complex layouts.

- Minor errors are typically formatting issues (e.g., spacing, capitalization).

Weaknesses:

- Slower processing speed per form (average 32 seconds).

- Higher cost per 1,000 forms than Gemini 2.5 Flash Lite ($5.06 versus $0.37).

Summary: GPT-5 Mini is the most accurate model for production-level handwritten form recognition. It is ideal when correctness is critical, such as in legal, healthcare, or government documents.

Gemini 2.5 Flash Lite: Excellent Alternative

Accuracy: Nearly identical to GPT-5 Mini

Strengths:

- Near-perfect performance on many forms.

- Fast processing (average 5.5 seconds per form) and extremely low cost ($0.368 per 1,000 forms).

- Reliable on most cursive and block handwriting.

Weaknesses:

- Slightly more sensitive to very dense or irregular layouts compared to GPT-5 Mini.

Summary: Gemini 2.5 Flash Lite offers a strong balance of accuracy, speed, and cost. It is an excellent alternative to GPT-5 Mini, especially for organizations processing large volumes of forms.

Azure: Solid Baseline Performance

Accuracy: Moderate

Strengths:

- Performs well on clear, structured forms with block-style handwriting.

- Consistent baseline performance for simple forms.

Weaknesses:

- Noticeable degradation on dense cursive or irregular layouts.

- Errors increase when fields are close together or overlapping.

Summary: Azure is a reliable general-purpose solution but may struggle with complex handwriting. It is best suited for moderate-complexity workflows.

AWS: Comparable to Azure

Accuracy: Moderate

Strengths:

- Similar performance to Azure on readable forms.

- Slightly faster processing per form (average 4.8 seconds).

Weaknesses:

- Accuracy fluctuates more between forms, particularly on dense or overlapping handwriting.

Summary: AWS is suitable for moderately complex handwritten documents but may require additional post-processing or manual review for critical fields.

Claude Sonnet: Mid-Range Reliability

Accuracy: Average

Strengths:

- Can handle some complex forms and irregular layouts.

- Occasionally detects cursive handwriting correctly where other mid-tier models fail.

Weaknesses:

- Inconsistent accuracy across forms.

- Errors in critical fields may make outputs less reliable for production use.

Summary: Claude Sonnet is acceptable for non-critical workflows but may not meet high business accuracy requirements.

Google: Limited Usability

Accuracy: Low

Strengths:

- Performs well on clean, clearly printed handwriting.

Weaknesses:

- Struggles significantly with cursive, dense, or irregular layouts.

- Frequent misrecognitions in semantically important fields (names, dates, numbers).

Summary: Google’s model is not suitable for challenging handwritten forms in its current configuration. It may work for simple documents but is unreliable for production use.

Grok 4: Unsuitable for Production

Accuracy: Lowest across all models

Strengths:

- Minimal; occasionally detects short, clear block handwriting correctly.

Weaknesses:

- Frequent omissions, misrecognitions, and merged fields.

- Fails on both cursive and structured handwriting.

- Extremely slow processing (average 129 seconds per form).

Summary: Grok 4 is not recommended for any real-world handwritten OCR workflows due to low accuracy and performance limitations.


Factors Influencing Handwritten OCR Model Performance

Model performance on handwritten forms is driven less by the language model itself and more by structural, visual, and semantic properties of the input. The benchmark highlights a consistent set of factors that either improve or degrade extraction accuracy.

Factors That Improve Model Performance

- Clear and consistent handwriting: Block-style letters with stable shapes, consistent spacing, and uniform size significantly reduce character-level ambiguity;

- Well-defined field boundaries: Text that stays within clearly bounded fields and does not overlap printed labels or form lines allows models to segment content accurately;

- Low layout density: Layouts with sufficient spacing between fields and minimal overlap reduce segmentation complexity;

- Short, unambiguous values: Fields containing concise values such as names, dates, or numeric entries are easier to recognize and validate.

Factors That Degrade Model Performance

Accuracy drops sharply when handwriting, layout, or semantics introduce ambiguity or structural complexity.

- High handwriting variability: Mixed writing styles (cursive, semi-cursive, block), inconsistent letter shapes, and connected strokes increase character-level confusion. Loops, ligatures, and overlapping strokes are particularly difficult to interpret reliably.

- Dense or irregular layouts: When handwritten text extends beyond intended boundaries, overlaps printed elements, or lacks clearly defined field names, segmentation errors increase. Models may merge adjacent fields, attach neighboring text fragments, or skip partially obscured characters.

- Multi-line and misaligned text: Irregular line alignment and values spanning multiple lines introduce ambiguity in text grouping and ordering, often leading to swapped or truncated outputs.

- Visually similar characters: Handwritten capital letters and certain numeric characters frequently resemble each other, producing errors that are small in appearance but critical in meaning.

- Semantic sensitivity of fields: Fields that require exact correctness, such as names, addresses, dates, or identifiers, magnify the impact of even minor recognition mistakes.

Systemic Constraints Limiting Maximum Accuracy

Even the top-performing models (GPT-5 Mini and Gemini 2.5 Flash Lite) struggled to achieve 95%+ accuracy due to several inherent challenges:

- Real-world handwriting contains noise that is not uniform or predictable,

- Forms combine human handwriting with complex printed templates,

- Many fields require exact correctness, where seemingly small mistakes are unacceptable,

- Recognition must handle irregular line alignment, mixed writing styles, and cross-field dependencies,

- Models are penalized not only for text errors, but also for incorrect segmentation, missed fields, and swapped line fragments.

The performance gap is not only due to OCR errors themselves; it is also driven by task-level strictness and real-world business tolerance thresholds.
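To illustrate why strict, field-level correctness is so demanding, the sketch below estimates how often an entire form comes out error-free at a given per-field accuracy. It rests on two assumptions that are not part of the benchmark: field errors are treated as independent, and the field counts are hypothetical.

```python
def error_free_form_rate(field_accuracy: float, fields_per_form: int) -> float:
    """Probability that every field on a form is correct.

    Assumption: errors in different fields are independent, so the
    probabilities multiply. Real forms may violate this (for example,
    one messy writer affects all fields), so treat this as a rough guide.
    """
    if not 0.0 <= field_accuracy <= 1.0:
        raise ValueError("field_accuracy must be between 0 and 1")
    if fields_per_form < 0:
        raise ValueError("fields_per_form must be non-negative")
    return field_accuracy ** fields_per_form


# Hypothetical form with 20 handwritten fields.
print(round(error_free_form_rate(0.95, 20), 3))  # 0.358
print(round(error_free_form_rate(0.99, 20), 3))  # 0.818
```

Under these assumptions, even 95% field-level accuracy leaves roughly two out of three 20-field forms with at least one error, which is why manual review of critical fields remains common in production.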

Performance and Cost Comparison

When selecting an AI model for handwritten form recognition, accuracy, speed, and cost must all be considered.

Cost comparison

| Model | Average cost per 1,000 forms |
|---|---|
| Azure | $10 |
| AWS | $65 |
| Google | $30 |
| Claude Sonnet | $18.7 |
| Gemini 2.5 Flash Lite | $0.37 |
| GPT-5 Mini | $5.06 |
| Grok 4 | $11.5 |

Performance comparison

| Model | Average processing time per form (s) |
|---|---|
| Azure | 6.588 |
| AWS | 4.845 |
| Google | 5.633 |
| Claude Sonnet | 15.488 |
| Gemini 2.5 Flash Lite | 5.484 |
| GPT-5 Mini | 32.179 |
| Grok 4 | 129.257 |

Gemini 2.5 Flash Lite offers the best balance, processing forms in just over five seconds at only $0.37 per 1,000 forms while delivering near-perfect accuracy. GPT-5 Mini achieves the highest accuracy, including on complex forms, but is slower (32 seconds per form) and more expensive (~$5 per 1,000 forms), making it ideal for mission-critical workflows.

Azure and AWS provide moderate accuracy on simple, structured handwriting, with fast processing times (roughly 5 to 7 seconds per form) but higher costs ($10 and $65 per 1,000 forms, respectively). Claude Sonnet is slower (about 15 seconds per form) and less consistent, while Google and Grok 4 fail to meet production-level reliability for most real-world forms.

In short, Gemini 2.5 Flash Lite is the top choice for high-volume, cost-sensitive operations, GPT-5 Mini for maximum accuracy, and Azure or AWS for moderate workloads.
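To translate the per-form figures into batch-level estimates, a rough calculation such as the following can help. The cost and timing values are copied from the two tables above (Google's figures are taken from the row between AWS and Claude Sonnet); the batch size and the assumption of strictly sequential processing are illustrative only, since real deployments typically run requests in parallel.

```python
from typing import Tuple

# Average cost per 1,000 forms in USD, from the cost comparison table.
COST_PER_1000_USD = {
    "Azure": 10.0,
    "AWS": 65.0,
    "Google": 30.0,
    "Claude Sonnet": 18.7,
    "Gemini 2.5 Flash Lite": 0.37,
    "GPT-5 Mini": 5.06,
    "Grok 4": 11.5,
}

# Average processing time per form in seconds, from the performance table.
SECONDS_PER_FORM = {
    "Azure": 6.588,
    "AWS": 4.845,
    "Google": 5.633,
    "Claude Sonnet": 15.488,
    "Gemini 2.5 Flash Lite": 5.484,
    "GPT-5 Mini": 32.179,
    "Grok 4": 129.257,
}


def estimate_batch(model: str, forms: int) -> Tuple[float, float]:
    """Return (cost in USD, hours of sequential processing) for a batch."""
    cost = COST_PER_1000_USD[model] * forms / 1000
    hours = SECONDS_PER_FORM[model] * forms / 3600
    return cost, hours


# Hypothetical batch of 100,000 forms, processed one at a time.
for model in ("Gemini 2.5 Flash Lite", "GPT-5 Mini"):
    cost, hours = estimate_batch(model, 100_000)
    print(f"{model}: ${cost:,.2f}, {hours:,.1f} h")
```

On these figures, GPT-5 Mini costs roughly 14 times as much per form as Gemini 2.5 Flash Lite and takes about six times as long, which is the trade-off to weigh against its accuracy advantage.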

Conclusion & Recommendations

Handwritten form recognition remains a complex AI challenge. Variations in handwriting style, dense layouts, and the need for precise extraction make consistent accuracy difficult, even for advanced models.

Our benchmark shows clear differences in model performance, highlighting the importance of aligning AI choice with business priorities. GPT-5 Mini leads in accuracy, performing reliably across both simple and complex forms. It is the ideal solution for workflows where precision is critical, such as healthcare, legal, or government documentation.

Gemini 2.5 Flash Lite delivers nearly the same accuracy as GPT-5 Mini but at a fraction of the cost and with faster processing. This makes it well-suited for high-volume operations where efficiency is as important as accuracy.

For organizations with moderate-complexity forms, Azure and AWS provide a reasonable balance of accuracy and speed, particularly for well-structured, block-style handwriting. Claude Sonnet can handle some irregular handwriting but remains inconsistent, while Google and Grok 4 are generally not suitable for production-level handwritten OCR.

In practice, businesses should choose models based on their priorities:

- Accuracy-critical workflows: GPT-5 Mini

- High-volume, cost-sensitive operations: Gemini 2.5 Flash Lite

- Moderate workloads with simpler forms: Azure or AWS

By understanding these trade-offs, organizations can ensure that their AI-powered form recognition meets both operational efficiency and quality standards.